Introduction

In this tutorial I will show you how to configure AWS Lambda for serverless image processing with the Amazon S3 service.

Things required for configuring :-

1. IAM User

2. S3 Bucket

3. IAM Role

4. Nodejs

5. Lambda Function

1. First create the IAM user and configure it in your system.

Note :- I have given this user administrative access. You also need to make sure that both buckets are created in the same region.

2. Create two buckets in the same region and upload a file called HappyFace.jpg to the techs2resolve bucket :-

Note :- You can rename any JPG file to HappyFace.jpg and upload it, or search for an image on Google and upload that.

1. techs2resolve <--- Upload HappyFace.jpg in the bucket

2. techs2resolveresized

The bucket names must follow the pattern above. For example, if you name the first bucket example, the second bucket must be named exampleresized. Change the bucket names to match your own.

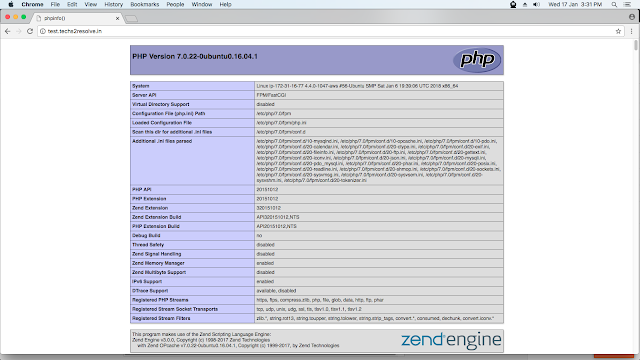
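To make the naming rule concrete, here is a small sketch of how the destination names are derived. It mirrors the two lines in the index.js handler used later in this tutorial; the helper name destinationFor is only for illustration and is not part of the handler.

```javascript
// Naming rule used by the handler in this tutorial:
// destination bucket = source bucket + "resized", and the key gets a "resized-" prefix.
// destinationFor is a hypothetical helper, introduced here only for illustration.
function destinationFor(srcBucket, srcKey) {
  return {
    bucket: srcBucket + "resized",
    key: "resized-" + srcKey,
  };
}

const dst = destinationFor("techs2resolve", "HappyFace.jpg");
console.log(dst.bucket + "/" + dst.key); // techs2resolveresized/resized-HappyFace.jpg
```

If the second bucket does not follow this pattern, the upload step of the function has nowhere to write to and fails.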

3. Install nodejs in your system :-

For macOS High Sierra or later:

https://nodejs.org/dist/v10.13.0/node-v10.13.0.pkg

For Ubuntu and Linux:

sudo apt-get install curl python-software-properties

curl -sL https://deb.nodesource.com/setup_10.x | sudo -E bash -

sudo apt-get install nodejs -y

4. Open a terminal and create a folder called techs2resolve-lambda-test (or any name you like) on your system :-

mkdir techs2resolve-lambda-test

cd techs2resolve-lambda-test

a) Create a file inside techs2resolve-lambda-test called index.js and paste the below code:-

// dependencies

var async = require('async');

var AWS = require('aws-sdk');

var gm = require('gm')

.subClass({ imageMagick: true }); // Enable ImageMagick integration.

var util = require('util');

// constants

var MAX_WIDTH = 100;

var MAX_HEIGHT = 100;

// get reference to S3 client

var s3 = new AWS.S3();

exports.handler = function(event, context, callback) {

// Read options from the event.

console.log("Reading options from event:\n", util.inspect(event, {depth: 5}));

var srcBucket = event.Records[0].s3.bucket.name;

// Object key may have spaces or unicode non-ASCII characters.

var srcKey =

decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, " "));

var dstBucket = srcBucket + "resized";

var dstKey = "resized-" + srcKey;

// Sanity check: validate that source and destination are different buckets.

if (srcBucket == dstBucket) {

callback("Source and destination buckets are the same.");

return;

}

// Infer the image type.

var typeMatch = srcKey.match(/\.([^.]*)$/);

if (!typeMatch) {

callback("Could not determine the image type.");

return;

}

var imageType = typeMatch[1];

if (imageType != "jpg" && imageType != "png") {

callback(`Unsupported image type: ${imageType}`);

return;

}

// Download the image from S3, transform, and upload to a different S3 bucket.

async.waterfall([

function download(next) {

// Download the image from S3 into a buffer.

s3.getObject({

Bucket: srcBucket,

Key: srcKey

},

next);

},

function transform(response, next) {

gm(response.Body).size(function(err, size) {

// Stop early if the image dimensions could not be read.

if (err) {

next(err);

return;

}

// Infer the scaling factor to avoid stretching the image unnaturally.

var scalingFactor = Math.min(

MAX_WIDTH / size.width,

MAX_HEIGHT / size.height

);

var width = scalingFactor * size.width;

var height = scalingFactor * size.height;

// Transform the image buffer in memory.

this.resize(width, height)

.toBuffer(imageType, function(err, buffer) {

if (err) {

next(err);

} else {

next(null, response.ContentType, buffer);

}

});

});

},

function upload(contentType, data, next) {

// Stream the transformed image to a different S3 bucket.

s3.putObject({

Bucket: dstBucket,

Key: dstKey,

Body: data,

ContentType: contentType

},

next);

}

], function (err) {

if (err) {

console.error(

'Unable to resize ' + srcBucket + '/' + srcKey +

' and upload to ' + dstBucket + '/' + dstKey +

' due to an error: ' + err

);

} else {

console.log(

'Successfully resized ' + srcBucket + '/' + srcKey +

' and uploaded to ' + dstBucket + '/' + dstKey

);

}

callback(null, "message");

}

);

};
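The scaling step in the transform function is easier to follow with numbers. Below is a minimal sketch that repeats the same arithmetic outside of Lambda, with no gm or S3 calls involved. The function name thumbnailSize is my own and does not appear in index.js.

```javascript
// Same limits as the constants in index.js.
const MAX_WIDTH = 100;
const MAX_HEIGHT = 100;

// thumbnailSize is a hypothetical helper that repeats the handler's arithmetic:
// pick the smaller of the two ratios so both sides fit inside the limits
// while the aspect ratio stays the same.
function thumbnailSize(width, height) {
  const scalingFactor = Math.min(MAX_WIDTH / width, MAX_HEIGHT / height);
  return { width: scalingFactor * width, height: scalingFactor * height };
}

console.log(thumbnailSize(400, 200)); // { width: 100, height: 50 }
console.log(thumbnailSize(200, 400)); // { width: 50, height: 100 }
```

Note that the same formula gives a factor greater than 1 for images smaller than 100x100, so small images are scaled up rather than left alone.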

b) Create a folder called node_modules inside the techs2resolve-lambda-test directory :-

cd techs2resolve-lambda-test

mkdir node_modules

c) The AWS Lambda runtime already includes the AWS SDK for JavaScript in Node.js, so you only need to install the other libraries. Open a command prompt, navigate to the techs2resolve-lambda-test folder, and install the libraries using the npm command, which is part of Node.js.

cd techs2resolve-lambda-test

npm install async gm

5. Zip the index.js file and node_modules folder as CreateThumbnail.zip :-

zip -r CreateThumbnail.zip index.js node_modules

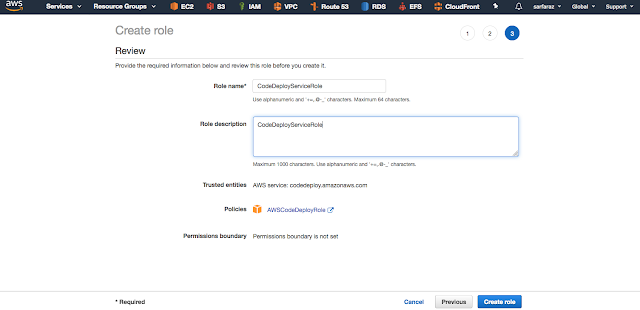

6. Create an execution role :-

Open the roles page in the IAM console.

Choose Create role.

Create a role with the following properties.

Service – AWS Lambda.

Permissions – AWSLambdaExecute.

Role name – lambda-s3-role.

The AWSLambdaExecute policy has the permissions that the function needs to manage objects in Amazon S3 and write logs to CloudWatch Logs.
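Choosing AWS Lambda as the service means that the role trusts the Lambda service to assume it. As a rough sketch, the trust policy behind that choice usually looks like the object below. Treat it as an illustration of the idea and not as output copied from my account.

```javascript
// Illustrative trust policy for a Lambda execution role.
// It allows the Lambda service to assume the role on behalf of the function.
const trustPolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      Principal: { Service: "lambda.amazonaws.com" },
      Action: "sts:AssumeRole",
    },
  ],
};

// Print it as JSON, the format IAM uses for policy documents.
console.log(JSON.stringify(trustPolicy, null, 2));
```

The permissions themselves (S3 access and CloudWatch Logs) come from the attached AWSLambdaExecute policy, not from this trust policy.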

7. Create the function with the aws cli command :-

Note :- You will need the ARN of the IAM role that you created. The role ARN is shown in the above screenshot.

cd techs2resolve-lambda-test

aws lambda create-function --function-name CreateThumbnail \

--zip-file fileb://CreateThumbnail.zip --handler index.handler --runtime nodejs8.10 \

--role arn:aws:iam::221794368523:role/lambda-s3-role \

--timeout 30 --memory-size 1024

8. Create a file called inputfile.txt and paste the below content :-

Change the bucket name (techs2resolve, in the "name" and "arn" fields) to your own bucket name.

vim inputfile.txt

{

"Records":[

{

"eventVersion":"2.0",

"eventSource":"aws:s3",

"awsRegion":"us-west-2",

"eventTime":"1970-01-01T00:00:00.000Z",

"eventName":"ObjectCreated:Put",

"userIdentity":{

"principalId":"AIDAJDPLRKLG7UEXAMPLE"

},

"requestParameters":{

"sourceIPAddress":"127.0.0.1"

},

"responseElements":{

"x-amz-request-id":"C3D13FE58DE4C810",

"x-amz-id-2":"FMyUVURIY8/IgAtTv8xRjskZQpcIZ9KG4V5Wp6S7S/JRWeUWerMUE5JgHvANOjpD"

},

"s3":{

"s3SchemaVersion":"1.0",

"configurationId":"testConfigRule",

"bucket":{

"name":"techs2resolve",

"ownerIdentity":{

"principalId":"A3NL1KOZZKExample"

},

"arn":"arn:aws:s3:::techs2resolve"

},

"object":{

"key":"HappyFace.jpg",

"size":1024,

"eTag":"d41d8cd98f00b204e9800998ecf8427e",

"versionId":"096fKKXTRTtl3on89fVO.nfljtsv6qko"

}

}

}

]

}
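Out of this whole test event, the function only reads the bucket name and the object key. The sketch below shows that lookup on a trimmed-down event, including the key decoding and the extension check from index.js. The helper name readS3Record and the sample key with plus signs are my own assumptions for the example.

```javascript
// readS3Record is a hypothetical helper that repeats what the handler reads from the event.
function readS3Record(event) {
  const record = event.Records[0];
  const bucket = record.s3.bucket.name;
  // S3 encodes spaces in keys as "+", so turn them back before URL-decoding.
  const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, " "));
  // Take everything after the last dot as the image type.
  const match = key.match(/\.([^.]*)$/);
  const imageType = match ? match[1] : null;
  return { bucket, key, imageType };
}

// Trimmed-down event with only the fields the handler uses (sample key is made up).
const sampleEvent = {
  Records: [
    { s3: { bucket: { name: "techs2resolve" }, object: { key: "my+holiday+photo.jpg" } } },
  ],
};

const parsed = readS3Record(sampleEvent);
console.log(parsed.bucket);    // techs2resolve
console.log(parsed.key);       // my holiday photo.jpg
console.log(parsed.imageType); // jpg
```

The extension check in the handler is case-sensitive, so a key ending in .JPG would be rejected as an unsupported image type.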

9. Run the following Lambda CLI invoke command to invoke the function. Note that the command requests asynchronous execution. You can optionally invoke it synchronously by specifying RequestResponse as the invocation-type parameter value.

aws lambda invoke --function-name CreateThumbnail --invocation-type Event \

--payload file://inputfile.txt outputfile.txt
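If you prefer to invoke the function from Node.js instead of the CLI, the two invocation types map to a single parameter. This is only a sketch: buildInvokeParams is a helper I made up, and the actual SDK call is left as a comment because it needs the aws-sdk package and configured credentials.

```javascript
// buildInvokeParams is a hypothetical helper that prepares the parameters
// for an asynchronous ("Event") or synchronous ("RequestResponse") invocation.
function buildInvokeParams(functionName, payloadObject, synchronous) {
  return {
    FunctionName: functionName,
    InvocationType: synchronous ? "RequestResponse" : "Event",
    Payload: JSON.stringify(payloadObject),
  };
}

// An empty Records list stands in for the content of inputfile.txt.
const params = buildInvokeParams("CreateThumbnail", { Records: [] }, false);
console.log(params.InvocationType); // Event

// With the AWS SDK for JavaScript (v2) installed, the call would look roughly like this:
//   const AWS = require("aws-sdk");
//   new AWS.Lambda().invoke(params, (err, data) => console.log(err || data.StatusCode));
```

With the Event type the call returns as soon as Lambda has queued the request, so the result of the resize only shows up in the logs and in the destination bucket.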

At this point the above command has resized your image and placed the result inside the techs2resolveresized bucket.

10. Now configure the trigger on the S3 bucket "techs2resolve" to automate this process :-

Go to Services, open S3, and select the techs2resolve bucket.

Go to Properties and select Events.

Configure it as shown in the screenshot below.
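For readers who want to see the trigger as data, here is a rough sketch of an equivalent notification configuration. The event type s3:ObjectCreated:* and the function ARN with the account ID 123456789012 are assumptions on my part for illustration, so check them against your own console settings. buildNotificationParams is a made-up helper name.

```javascript
// buildNotificationParams is a hypothetical helper that builds the parameters
// for an S3 bucket notification that calls a Lambda function.
function buildNotificationParams(bucket, lambdaArn) {
  return {
    Bucket: bucket,
    NotificationConfiguration: {
      LambdaFunctionConfigurations: [
        {
          LambdaFunctionArn: lambdaArn,
          // Assumption: fire on every kind of object creation (put, post, copy, multipart).
          Events: ["s3:ObjectCreated:*"],
        },
      ],
    },
  };
}

// Placeholder ARN: the account ID is made up, the region matches the test event.
const notification = buildNotificationParams(
  "techs2resolve",
  "arn:aws:lambda:us-west-2:123456789012:function:CreateThumbnail"
);
console.log(JSON.stringify(notification, null, 2));

// With the AWS SDK for JavaScript (v2) installed, this could be applied roughly like this:
//   const AWS = require("aws-sdk");
//   new AWS.S3().putBucketNotificationConfiguration(notification, (err) => console.log(err || "ok"));
```

If you apply it through the SDK rather than the console, S3 must already have permission to invoke the function, otherwise the call is rejected.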

11. Upload images to the "techs2resolve" bucket and the Lambda function will resize them automatically.

As you can see in the above image, the images have been resized.

I found the reference at this AWS link :- AWS-S3-LAMBDA-SERVERLESS

That's it, enjoy using it.

Please comment, like, and share.